Market Research Report

Product Code

1850240

Deep Learning - Market Share Analysis, Industry Trends & Statistics, Growth Forecasts (2025 - 2030)

||||||

※ 本網頁內容可能與最新版本有所差異。詳細情況請與我們聯繫。

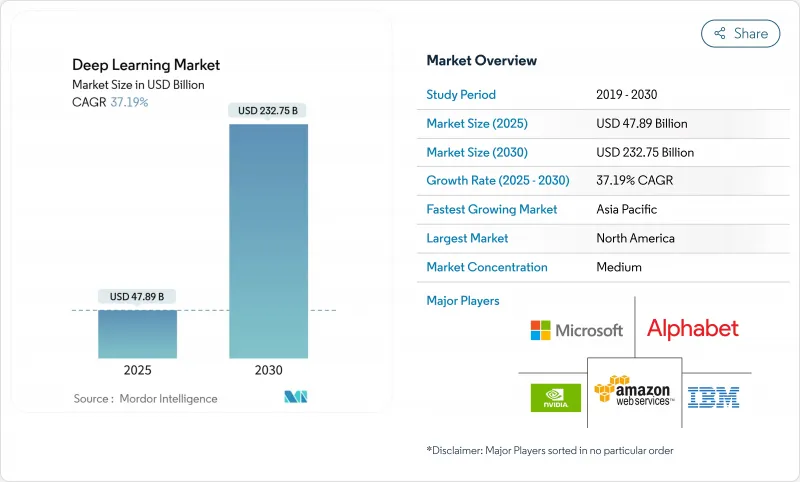

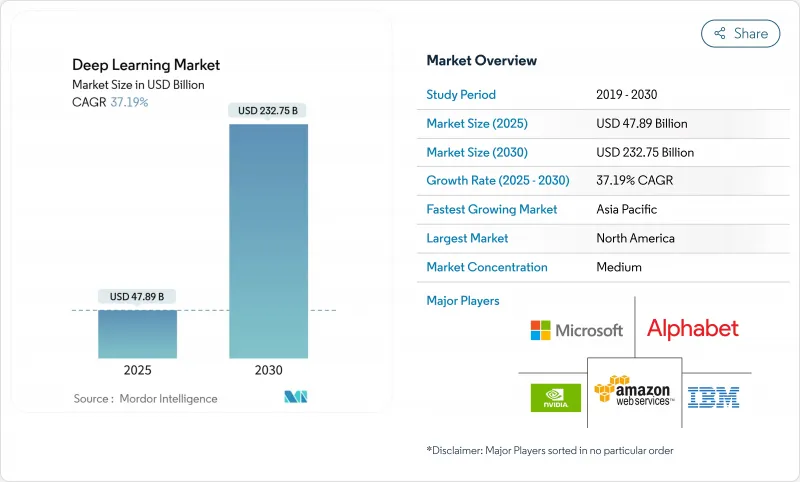

The deep learning market size is estimated at USD 47.89 billion in 2025 and is projected to reach USD 232.75 billion by 2030, advancing at a 37.19% CAGR.
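The growth rate quoted above can be cross-checked against the two market-size figures. The sketch below assumes the standard compound annual growth rate formula and a five-year span from 2025 to 2030; the function and variable names are illustrative and do not come from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market-size figures quoted in the report, in USD billion.
size_2025 = 47.89
size_2030 = 232.75

# Assumption: the forecast period spans five compounding years.
implied = cagr(size_2025, size_2030, years=5)
print(f"Implied CAGR 2025-2030: {implied:.2%}")
```

Under these assumptions the implied rate agrees with the stated 37.19% CAGR to within rounding.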

Hardware accelerators now deliver larger models at lower latencies, while transformer breakthroughs accelerate adoption across every industry. Financial institutions, hospitals, manufacturers, and retailers embed neural networks directly into workflows instead of confining them to research labs. Hardware vendors, cloud platforms, and software specialists form new alliances that reduce time-to-deployment for enterprise buyers. At the same time, energy use, regulatory scrutiny, and skills shortages challenge the pace of scale-out.

Global Deep Learning Market Trends and Insights

Explosive Growth in Unstructured Data Volumes

Every day enterprises generate 2.5 quintillion bytes of information, and roughly 80% of that data remains unstructured. Optical neural processors now reach 1.57 peta-operations per second, enabling real-time video, audio, and text analysis for autonomous systems and industrial monitoring. Financial institutions report a 300% increase in alternative data feeds, including satellite imagery and social sentiment, which demands specialized models able to correlate disparate sources. Edge computing deployments rise 34% year over year as firms shift from batch analytics to low-latency inference. The resulting feedback loop boosts model accuracy while expanding addressable workloads.

Declining Cost and Performance Leap of AI Accelerators

Advanced 3-nanometer designs, stacked HBM memory, and photonic interconnects push compute costs down by 40% annually. NVIDIA's Blackwell Ultra delivers 1.5X performance over its prior generation. AMD's MI350 series posts 35X throughput gains versus earlier chips. These leaps allow mid-market companies to run 100-billion-parameter models on single-node systems instead of distributed clusters. Lower capital outlays widen the customer base and shorten procurement cycles, turning hardware into the fastest-growing deep learning market segment.
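To illustrate what a 40% annual decline in compute cost means when compounded, here is a minimal sketch. It assumes the rate stays constant over the horizon, which the report does not state; the five-year horizon and all names are chosen purely for illustration.

```python
ANNUAL_DECLINE = 0.40  # 40% cost reduction per year, as quoted in the report


def remaining_cost_fraction(years: int, annual_decline: float = ANNUAL_DECLINE) -> float:
    """Fraction of the original compute cost left after compounding the decline."""
    return (1 - annual_decline) ** years


# Assumption: a five-year horizon, chosen to match the 2025-2030 forecast window.
for year in range(1, 6):
    print(f"After year {year}: {remaining_cost_fraction(year):.1%} of the original cost")

remaining_after_5 = remaining_cost_fraction(5)
```

If the rate held for five years, the same workload would cost roughly 8% of its starting price, which is consistent with the report's argument that lower capital outlays widen the customer base.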

High Energy Footprint and Cooling Costs

AI clusters are projected to consume 46-82 TWh in 2025 and could rise to 1,050 TWh by 2030. Individual training runs now draw megawatt-hours of power, and racks outfitted for GPUs require 40-140 kW versus 10 kW for typical servers. Direct-liquid and immersion cooling add 15-20% to capital costs, while fluctuating renewable supply creates reliability challenges. Energy now represents up to 40% of total AI ownership costs, forcing buyers to weigh electricity tariffs and carbon objectives before scaling.
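The energy projections above imply a steep annual growth rate. The sketch below derives it from the quoted range, assuming five compounding years between 2025 and 2030, and also compares rack power draw; the names are illustrative and not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the report.
twh_2025_low, twh_2025_high = 46.0, 82.0  # projected AI cluster consumption, TWh
twh_2030 = 1050.0                         # projected consumption by 2030, TWh
gpu_rack_kw = (40.0, 140.0)               # GPU rack power range, kW
server_rack_kw = 10.0                     # typical server rack, kW

# Starting from the high 2025 estimate gives the lower implied growth rate.
growth_low = cagr(twh_2025_high, twh_2030, years=5)
growth_high = cagr(twh_2025_low, twh_2030, years=5)
print(f"Implied annual growth in consumption: {growth_low:.0%} to {growth_high:.0%}")

# GPU racks draw a multiple of the power of a typical server rack.
ratio_low = gpu_rack_kw[0] / server_rack_kw
ratio_high = gpu_rack_kw[1] / server_rack_kw
print(f"GPU rack draw vs typical rack: {ratio_low:.0f}x to {ratio_high:.0f}x")
```

On these assumptions, energy consumption would grow considerably faster than the market's 37.19% revenue CAGR, which helps explain why the report lists energy as a restraint.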

Other drivers and restraints analyzed in the detailed report include:

- Consumer-Grade DL Integration

- Medical-Imaging and Diagnostics Adoption Surge

- Scarcity of Specialized DL Talent

For the complete list of drivers and restraints, see the table of contents.

Segment Analysis

Hardware is forecast to grow at a 37.5% CAGR through 2030, propelled by demand for GPUs, custom ASICs, and wafer-scale engines. NVIDIA's GB10 Grace Blackwell superchip powers personal AI stations priced at USD 3,000 that can handle 200-billion-parameter models. Cerebras Systems demonstrates inference at 1,500 tokens per second on its wafer-scale platform, representing a 57-fold speed improvement over legacy GPU clusters. Telecommunication operators, automotive OEMs, and cloud providers adopt these accelerators to shrink floor space and energy consumption. Start-ups leverage lower capex to prototype vertical solutions, narrowing time-to-market for industry-specific applications.

Software and Services still command most revenues because recurring subscriptions, managed platforms, and integration projects generate predictable cash flows. Vertical foundation models for healthcare, finance, and manufacturing drive service demand as clients seek domain expertise. Cloud vendors bundle model-as-a-service offerings with orchestration tools, letting enterprises avoid infrastructure management. Customization requires consulting help, sustaining double-digit growth in services even as hardware grows faster in percentage terms. The symbiosis between hardware innovation and software monetization ensures balanced expansion across the deep learning market.

BFSI controlled 24.5% of deep learning market share in 2024, leveraging fraud detection, risk modeling, and algorithmic trading. Large banks integrate transformer-based customer-service agents that resolve 70% of queries on first contact, raising satisfaction scores and trimming costs. Payment networks embed anomaly detection on streaming data to block fraudulent transactions within milliseconds.

Healthcare and Life Sciences display the fastest 38.3% CAGR as diagnostic approvals surge. Radiology workflows that once required manual review now achieve instant triage, while genomic analysts deploy foundation models to identify promising drug targets in weeks instead of months. Hospitals adopt privacy-preserving federated learning to safeguard patient records, satisfying regulators and insurance providers. Pharmaceutical firms invest in AI-driven protein-folding and simulation tools, accelerating clinical trial timelines. This momentum positions healthcare as a pivotal revenue engine for the deep learning market.

The deep learning market is segmented by offering (hardware, software and services), end-user industry (BFSI, retail and e-commerce, manufacturing, and more), application (image and video recognition, speech and voice recognition, NLP and text analytics, and more), deployment (cloud, on-premises), and geography. The market forecasts are provided in terms of value (USD).

Geography Analysis

North America held 32.5% of the deep learning market in 2024. Semiconductor fabrication is expanding domestically as TSMC invests USD 165 billion in Arizona plants, reducing supply-chain risk. Canada capitalizes on research excellence to spin out NLP start-ups, while Mexico becomes a near-shore assembly base for AI hardware. Regional energy grids, especially in Virginia and Texas, struggle to accommodate racks drawing up to 140 kW, prompting utilities to accelerate renewable capacity.

Asia-Pacific is the fastest-growing region, with a forecast CAGR of 37.2%. India implements national AI centers that offer subsidized compute credits to start-ups, spawning a wave of fintech and agritech solutions. Japan leverages robotics heritage to commercialize service robots for aging populations, while South Korea couples 5G leadership with edge AI deployments in smart factories. Australia experiments with autonomous mining trucks, and Southeast Asian e-commerce firms apply recommendation engines to vast mobile consumer bases. The diversity of use cases underpins sustained regional demand for deep learning solutions.

Europe advances at a steady pace despite compliance overhead from the EU AI Act, which can impose fines up to 3% of global turnover for violations. German automakers integrate explainable AI for safety-critical perception in electric vehicles, while Italian machinery makers embed predictive maintenance analytics. Nordic countries power data centers with hydro and wind resources, marketing carbon-neutral AI services that appeal to sustainability-minded clients. The United Kingdom operates a flexible post-Brexit framework, attracting US and Asian firms seeking access to both European and Commonwealth markets. Collectively, these dynamics position Europe as a hub for responsible and energy-efficient deep learning market growth.

- NVIDIA Corporation

- Google LLC (Alphabet)

- Amazon Web Services, Inc.

- Microsoft Corporation

- IBM Corporation

- Meta Platforms, Inc.

- Intel Corporation

- Advanced Micro Devices, Inc.

- SAS Institute Inc.

- RapidMiner, Inc.

- Baidu, Inc.

- Qualcomm Technologies, Inc.

- Huawei Technologies Co., Ltd.

- Graphcore Ltd.

- Cerebras Systems, Inc.

- Xilinx (part of AMD)

- Samsung Electronics Co., Ltd.

- Oracle Corporation

- H2O.ai

- Databricks, Inc.

- SenseTime Group

- OpenAI LP

- Tesla, Inc.

- NEC Corporation

- Darktrace plc

Additional Benefits:

- The market estimate (ME) sheet in Excel format

- 3 months of analyst support

TABLE OF CONTENTS

1 INTRODUCTION

- 1.1 Study Assumptions and Market Definition

- 1.2 Scope of the Study

2 RESEARCH METHODOLOGY

3 EXECUTIVE SUMMARY

4 MARKET LANDSCAPE

- 4.1 Market Overview

- 4.2 Market Drivers

- 4.2.1 Explosive growth in unstructured data volumes

- 4.2.2 Declining cost and performance leap of AI accelerators

- 4.2.3 Consumer-grade DL integration (voice, vision, IoT)

- 4.2.4 Medical-imaging and diagnostics adoption surge

- 4.2.5 Vertical foundation models unlocking niche markets

- 4.2.6 Edge/on-device DL for privacy and ultra-low latency

- 4.3 Market Restraints

- 4.3.1 High energy footprint and cooling costs

- 4.3.2 Scarcity of specialised DL talent

- 4.3.3 Tightening global AI regulation (e.g., EU AI Act)

- 4.3.4 IP/copyright liability for training data

- 4.4 Supply-Chain Analysis

- 4.5 Regulatory Landscape

- 4.6 Technological Outlook

- 4.7 Porter's Five Forces Analysis

- 4.7.1 Bargaining Power of Suppliers

- 4.7.2 Bargaining Power of Buyers

- 4.7.3 Threat of New Entrants

- 4.7.4 Threat of Substitutes

- 4.7.5 Intensity of Competitive Rivalry

- 4.8 Assessment of Macroeconomic Factors on the Market

5 MARKET SIZE AND GROWTH FORECASTS (VALUE)

- 5.1 Segmentation by Offering

- 5.1.1 Hardware

- 5.1.2 Software and Services

- 5.2 Segmentation by End-user Industry

- 5.2.1 BFSI

- 5.2.2 Retail and eCommerce

- 5.2.3 Manufacturing

- 5.2.4 Healthcare and Life Sciences

- 5.2.5 Automotive and Transportation

- 5.2.6 Telecom and Media

- 5.2.7 Security and Surveillance

- 5.2.8 Other Applications

- 5.3 Segmentation by Application

- 5.3.1 Image and Video Recognition

- 5.3.2 Speech and Voice Recognition

- 5.3.3 NLP and Text Analytics

- 5.3.4 Autonomous Systems and Robotics

- 5.3.5 Predictive Analytics and Forecasting

- 5.3.6 Other Applications

- 5.4 Segmentation by Deployment Mode

- 5.4.1 Cloud

- 5.4.2 On-Premises

- 5.5 Segmentation by Geography

- 5.5.1 North America

- 5.5.1.1 United States

- 5.5.1.2 Canada

- 5.5.1.3 Mexico

- 5.5.2 South America

- 5.5.2.1 Brazil

- 5.5.2.2 Argentina

- 5.5.2.3 Rest of South America

- 5.5.3 Europe

- 5.5.3.1 Germany

- 5.5.3.2 United Kingdom

- 5.5.3.3 France

- 5.5.3.4 Italy

- 5.5.3.5 Spain

- 5.5.3.6 Russia

- 5.5.3.7 Rest of Europe

- 5.5.4 Asia-Pacific

- 5.5.4.1 China

- 5.5.4.2 Japan

- 5.5.4.3 India

- 5.5.4.4 South Korea

- 5.5.4.5 Australia

- 5.5.4.6 Rest of Asia-Pacific

- 5.5.5 Middle East and Africa

- 5.5.5.1 Middle East

- 5.5.5.1.1 Saudi Arabia

- 5.5.5.1.2 United Arab Emirates

- 5.5.5.1.3 Turkey

- 5.5.5.1.4 Rest of Middle East

- 5.5.5.2 Africa

- 5.5.5.2.1 South Africa

- 5.5.5.2.2 Nigeria

- 5.5.5.2.3 Egypt

- 5.5.5.2.4 Rest of Africa

6 COMPETITIVE LANDSCAPE

- 6.1 Market Concentration

- 6.2 Strategic Moves

- 6.3 Market Share Analysis

- 6.4 Company Profiles (includes Global level Overview, Market level overview, Core Segments, Financials as available, Strategic Information, Market Rank/Share for key companies, Products and Services, and Recent Developments)

- 6.4.1 NVIDIA Corporation

- 6.4.2 Google LLC (Alphabet)

- 6.4.3 Amazon Web Services, Inc.

- 6.4.4 Microsoft Corporation

- 6.4.5 IBM Corporation

- 6.4.6 Meta Platforms, Inc.

- 6.4.7 Intel Corporation

- 6.4.8 Advanced Micro Devices, Inc.

- 6.4.9 SAS Institute Inc.

- 6.4.10 RapidMiner, Inc.

- 6.4.11 Baidu, Inc.

- 6.4.12 Qualcomm Technologies, Inc.

- 6.4.13 Huawei Technologies Co., Ltd.

- 6.4.14 Graphcore Ltd.

- 6.4.15 Cerebras Systems, Inc.

- 6.4.16 Xilinx (part of AMD)

- 6.4.17 Samsung Electronics Co., Ltd.

- 6.4.18 Oracle Corporation

- 6.4.19 H2O.ai

- 6.4.20 Databricks, Inc.

- 6.4.21 SenseTime Group

- 6.4.22 OpenAI LP

- 6.4.23 Tesla, Inc.

- 6.4.24 NEC Corporation

- 6.4.25 Darktrace plc

7 MARKET OPPORTUNITIES AND FUTURE OUTLOOK

- 7.1 White-space and Unmet-Need Assessment